Building your own AI isn’t just a technological ambition—it’s a strategic imperative for enterprises looking to gain a competitive edge. Off-the-shelf solutions may offer convenience, but they can’t match the advantage of proprietary AI systems tailored to your unique business needs. From ensuring data sovereignty to enabling exclusive capabilities, custom AI development allows organizations to take full control of their innovation journey.

However, building enterprise AI solutions requires more than vision; it demands robust infrastructure, skilled teams, and a clear integration strategy. The rewards? Long-term ROI, unmatched scalability, and the ability to stay ahead in a rapidly evolving marketplace.

This guide unpacks the essential steps to create your own AI, equipping you with the insights needed to navigate technical requirements, manage costs, and achieve enterprise-grade excellence. Let’s explore how to transition from AI adoption to AI ownership.

Key Takeaways

- Strategic Advantage: Building proprietary AI solutions offers enterprises unique competitive advantages through customized capabilities and complete data sovereignty, distinguishing them from competitors using off-the-shelf solutions.

- Infrastructure Requirements: Successful custom AI development demands robust technical infrastructure, including scalable computing resources, data storage systems, and specialized development environments tailored to enterprise needs.

- Integration Framework: Custom AI solutions must seamlessly connect with existing enterprise systems through a carefully planned integration architecture that preserves legacy functionality while enabling new capabilities.

- Cost Considerations: While initial investment in custom AI development is substantial, enterprises can expect long-term ROI through reduced licensing costs, improved operational efficiency, and proprietary intellectual property.

- Development Timeline: Building enterprise AI solutions typically requires 12-18 months for full implementation, including planning, development, testing, and deployment phases.

- Data Sovereignty: In-house AI development ensures complete control over sensitive data and algorithms, addressing critical security and compliance requirements for regulated industries.

- Scalability Planning: Custom AI architectures must be designed with future growth in mind, incorporating flexible frameworks that can expand alongside enterprise needs.

- Talent Requirements: Successful implementation depends on assembling specialized teams with expertise in AI development, data science, and enterprise architecture.

- Risk Management: Building proprietary AI systems requires comprehensive risk assessment and mitigation strategies, including regular security audits and compliance monitoring.

In today’s competitive business landscape, the ability to build your own AI represents a strategic advantage for forward-thinking enterprises. Custom AI development allows organizations to create solutions precisely aligned with their unique challenges and opportunities. This comprehensive guide explores the essential aspects of developing proprietary AI systems, from strategic planning to implementation and scaling.

Understanding the Build Your Own AI Approach

The build-your-own-AI approach involves developing custom artificial intelligence solutions tailored to specific business requirements rather than relying solely on off-the-shelf products. This strategy enables organizations to create proprietary AI systems that address unique challenges while maintaining control over intellectual property and data.

At its core, custom AI development requires a blend of domain expertise, technical capabilities, and strategic vision. Organizations pursuing this path typically seek competitive differentiation through AI capabilities that competitors cannot easily replicate. The approach spans a spectrum from modifying existing open-source frameworks to building comprehensive AI systems from the ground up.

According to recent industry data, companies that successfully implement custom AI solutions report a 35% improvement in operational efficiency compared to those using generic AI tools. This performance gap highlights the value of tailored solutions that precisely match business processes and objectives.

The decision to build versus buy depends on several factors including technical requirements, available resources, and strategic goals. While ready-to-use AI solutions offer convenience, they often lack the specificity needed for complex enterprise challenges. Custom development provides the flexibility to create AI systems that evolve with your business and integrate seamlessly with existing infrastructure.

Before embarking on a custom AI journey, organizations should conduct a thorough assessment of their AI readiness, including data availability, technical capabilities, and organizational alignment. This foundation ensures that the development process addresses genuine business needs rather than pursuing AI for its own sake.

Strategic Planning for Custom AI Development

Effective custom AI development begins with comprehensive strategic planning that aligns AI initiatives with core business objectives. This planning phase establishes the foundation for successful implementation and long-term value creation.

Start by identifying specific business problems that AI can address. The most successful enterprise AI solutions target well-defined challenges with measurable outcomes. Document these use cases with clear success metrics to guide development and evaluate performance.

Conduct a thorough assessment of your data ecosystem, as high-quality data forms the backbone of effective AI systems. This assessment should evaluate data availability, quality, accessibility, and governance. Many organizations discover significant data gaps during this process that must be addressed before development begins.

Develop a realistic resource plan that accounts for both technical and human requirements. Building AI in-house typically requires data scientists, machine learning engineers, domain experts, and project managers. Organizations must decide whether to build these capabilities internally, partner with external specialists, or pursue a hybrid approach.

A Fortune 500 manufacturing company recently reduced product defects by 47% after implementing a custom computer vision system designed specifically for their production lines. Their success stemmed from meticulous planning that included detailed process mapping and collaboration between AI specialists and production engineers.

Create a phased implementation roadmap with clear milestones and decision points. This approach allows for iterative development and provides opportunities to validate assumptions before committing additional resources. The roadmap should include provisions for testing, validation, and continuous improvement.

Defining Business Requirements and Use Cases

Successful proprietary AI systems begin with precise business requirements that translate organizational needs into technical specifications. This critical step bridges the gap between business challenges and AI capabilities.

Document specific use cases with detailed descriptions of current processes, pain points, and desired outcomes. Each use case should include quantifiable metrics that define success, such as cost reduction percentages, time savings, or quality improvements. These metrics provide objective criteria for evaluating AI performance.

Prioritize use cases based on business impact, technical feasibility, and resource requirements. This prioritization helps organizations focus on high-value opportunities while building momentum through early wins. Start with projects that offer substantial benefits with manageable complexity.
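One way to make this prioritization concrete is a simple weighted score. The sketch below is only illustrative: the 1-to-5 scales, the weights, and the use case names are assumptions chosen for the example, not recommendations from this guide.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: int        # business impact, 1 (low) to 5 (high)
    feasibility: int   # technical feasibility, 1 (hard) to 5 (easy)
    cost: int          # resource requirements, 1 (cheap) to 5 (expensive)


def priority_score(uc, w_impact=0.5, w_feasibility=0.3, w_cost=0.2):
    """Higher is better; cost is inverted so cheaper projects score higher."""
    return w_impact * uc.impact + w_feasibility * uc.feasibility + w_cost * (6 - uc.cost)


def rank_use_cases(use_cases):
    """Return use cases sorted from highest to lowest priority score."""
    return sorted(use_cases, key=priority_score, reverse=True)


# Hypothetical candidates scored by a cross-functional review.
candidates = [
    UseCase("invoice matching", impact=4, feasibility=5, cost=2),
    UseCase("demand forecasting", impact=5, feasibility=3, cost=4),
    UseCase("chat assistant", impact=3, feasibility=2, cost=5),
]
ranked = rank_use_cases(candidates)
```

With these example inputs, the high-feasibility, low-cost project ranks first even though another candidate has higher raw impact, which is the "early win" pattern described above.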

Involve stakeholders from across the organization in requirements gathering to ensure a comprehensive understanding of business needs. This collaborative approach helps identify hidden requirements and builds organizational buy-in for the AI initiative.

A healthcare provider identified medication reconciliation as a high-priority use case for custom AI development after discovering that manual processes consumed over 15,000 clinical hours annually. By clearly defining requirements and success metrics, they developed an AI solution that reduced processing time by 73% while improving accuracy.

Create detailed functional and non-functional requirements that will guide development efforts. Functional requirements describe what the system should do, while non-functional requirements address performance, security, scalability, and other quality attributes. These specifications form the foundation for system design and testing.

Assessing Technical Capabilities and Resources

Before embarking on custom AI development, organizations must honestly assess their technical capabilities and resource availability. This evaluation helps identify gaps and informs build-versus-buy decisions for different components of the AI solution.

Evaluate your organization’s expertise in key areas, including data engineering, machine learning, software development, and domain knowledge. This assessment should identify both strengths to leverage and weaknesses to address through hiring, training, or partnerships.

Consider your computing infrastructure requirements, including processing power, storage capacity, and networking capabilities. Building sophisticated AI models often requires specialized hardware such as GPUs or TPUs, particularly for training complex neural networks. Determine whether on-premises infrastructure, cloud resources, or a hybrid approach best suits your needs.

Assess your data readiness by examining the quantity, quality, and accessibility of relevant data. Many AI projects stall due to data limitations discovered after development begins. Conduct a thorough data audit to identify gaps and develop strategies for data collection, cleaning, and preparation.

According to a recent McKinsey survey, organizations that accurately assessed their AI capabilities before beginning development were 2.3 times more likely to successfully implement their solutions within budget and timeline constraints.

Develop a realistic budget that accounts for all aspects of AI development, including personnel, infrastructure, tools, and ongoing maintenance. The cost of developing proprietary AI systems varies widely based on complexity, but organizations should plan for both initial development and long-term support.

Technical Foundations for Building Your Own AI

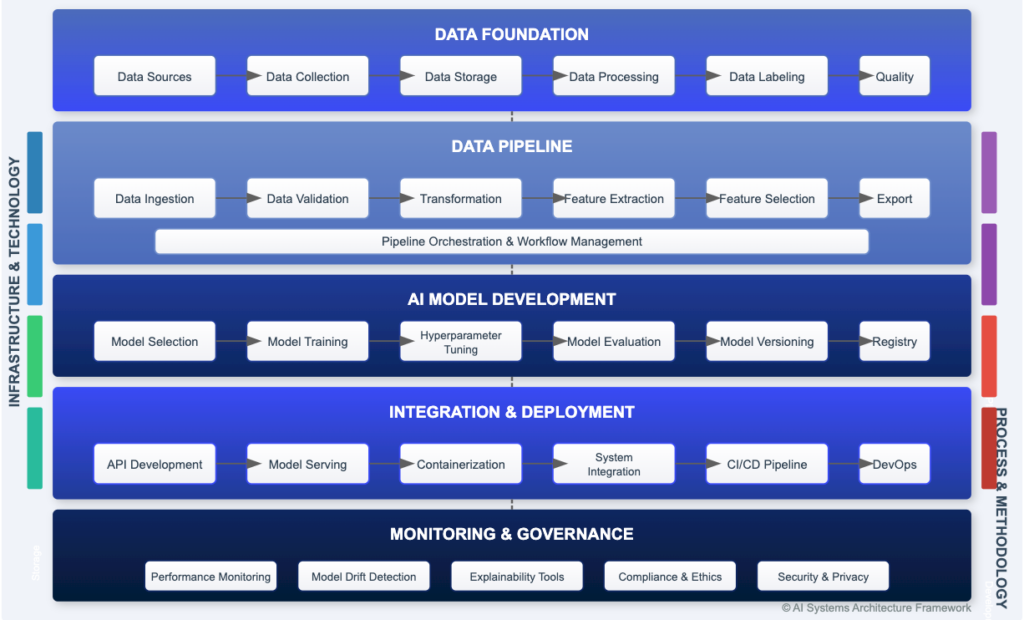

Establishing solid technical foundations is essential for building scalable AI solutions in-house. These foundations encompass the infrastructure, frameworks, and architectural decisions that support successful AI development and deployment.

Select appropriate AI frameworks and libraries based on your specific use cases and technical requirements. Popular options include TensorFlow, PyTorch, and scikit-learn, each with distinct strengths for different applications. Your choice should consider factors such as performance, community support, and compatibility with existing systems.

Design a flexible AI infrastructure that can scale with growing data volumes and computational demands. This infrastructure should support the entire AI lifecycle, from data ingestion and preprocessing to model training, evaluation, and deployment. Cloud platforms offer scalable resources that can adapt to changing requirements without significant upfront investment.

Implement robust data pipelines that ensure consistent, high-quality data flows to AI systems. These pipelines should include mechanisms for data validation, transformation, and versioning. Effective data management forms the foundation for reliable AI performance and facilitates model retraining as new data becomes available.

A financial services firm built a custom fraud detection system that processes over 10 million transactions daily with 99.7% accuracy. Their success stemmed from a modular architecture that separated data processing, feature engineering, and model inference components, allowing each to scale independently as transaction volumes grew.

Establish comprehensive monitoring and observability capabilities that provide visibility into AI system performance. These capabilities should track both technical metrics (such as response times and resource utilization) and business outcomes (such as prediction accuracy and business impact). Effective monitoring enables proactive maintenance and continuous improvement.

Selecting the Right AI Development Frameworks

Choosing appropriate frameworks and tools is a critical decision when building your own AI. These technologies form the foundation of your development process and significantly impact productivity, performance, and maintainability.

Evaluate machine learning frameworks based on your specific use cases, team expertise, and performance requirements. TensorFlow offers a comprehensive ecosystem with strong production deployment capabilities, while PyTorch provides flexibility and ease of debugging, which many researchers prefer. For simpler applications, scikit-learn offers accessible implementations of common algorithms.

Consider specialized frameworks for specific AI domains. For natural language processing, Hugging Face’s Transformers library provides state-of-the-art models and tools. Computer vision applications might benefit from frameworks like OpenCV or specialized deep learning libraries. These domain-specific tools can accelerate development and improve results.

Assess the maturity and community support for potential frameworks. Active communities provide valuable resources, including documentation, tutorials, and pre-trained models. Community size and engagement also indicate long-term viability, reducing the risk of investing in technologies that may become obsolete.

A retail company reduced its recommendation engine development time by 60% by selecting a framework with extensive pre-trained models and transfer learning capabilities. This decision allowed them to focus on customizing models for their specific product catalog rather than building capabilities from scratch.

Evaluate integration capabilities with your existing technology stack. The selected frameworks should work seamlessly with your data storage systems, deployment platforms, and monitoring tools. This integration minimizes friction during development and deployment while leveraging existing investments.

Data Requirements and Management

Effective data management forms the cornerstone of successful custom AI development. Organizations must establish comprehensive strategies for data collection, preparation, and governance to support their AI initiatives.

Identify and prioritize data sources based on relevance to your AI objectives. These sources may include internal systems, third-party providers, public datasets, or newly collected information. Document data lineage and quality characteristics for each source to inform preprocessing requirements and reliability assessments.

Implement robust data preprocessing pipelines that transform raw data into formats suitable for AI model training. These pipelines should address common challenges, including missing values, outliers, inconsistent formats, and feature engineering. Automated preprocessing ensures consistency between training and production environments.
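As a rough illustration of keeping training and production consistent, the sketch below learns imputation and outlier-clipping parameters from training data once and then reuses them unchanged on new data. The median fill and the 1.5 × IQR clipping rule are assumptions picked for the example; real pipelines would choose rules per feature.

```python
import statistics


def fit_preprocessor(values):
    """Learn imputation and clipping parameters from training data only."""
    present = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    return {
        "median": statistics.median(present),
        "low": q1 - 1.5 * iqr,
        "high": q3 + 1.5 * iqr,
    }


def transform(values, params):
    """Fill missing values with the training median, then clip outliers."""
    filled = [params["median"] if v is None else v for v in values]
    return [min(max(v, params["low"]), params["high"]) for v in filled]


# Hypothetical feature column with one missing value and one extreme outlier.
train = [10.0, 12.0, None, 11.0, 13.0, 12.0, 11.0, 10.0, 12.0, 13.0, 250.0]
params = fit_preprocessor(train)
clean_train = transform(train, params)

# Production data is transformed with the SAME fitted parameters.
clean_new = transform([None, 9.0, 400.0], params)
```

The key design choice is that `transform` never recomputes statistics, so a production record is treated exactly as an identical training record would have been.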

Establish data governance policies that address privacy, security, and compliance requirements. These policies should define data access controls, retention periods, and usage limitations. Proper governance is particularly important when working with sensitive information such as personal data or proprietary business information.

Research indicates that organizations with mature data management practices achieve AI implementation success rates 30% higher than those with ad hoc approaches. This advantage stems from higher data quality and availability throughout the AI lifecycle.

Create a strategy for managing data drift and model performance over time. As real-world conditions change, the relationship between input data and desired outcomes may shift, reducing model effectiveness. Regular monitoring and retraining processes help maintain performance despite changing conditions.
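Drift monitoring needs some numeric measure of how far production inputs have moved from the training distribution. One widely used option is the Population Stability Index (PSI), sketched below for a single numeric feature. The bin count, the floor for empty buckets, and the 0.25 cutoff (a commonly cited rule of thumb, not a standard) are assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two numeric samples; larger values mean a larger distribution shift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference sample is constant

    def bucket_shares(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp values outside the reference range
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training data versus shifted production data.
reference = [i / 100 for i in range(1000)]
shifted = [x + 3 for x in reference]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
needs_retraining_review = psi_shifted > 0.25  # assumed threshold
```

A check like this can run on a schedule per feature and feed the retraining triggers, with the threshold tuned against how much drift actually hurts model performance.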

Computing Resources and Infrastructure

Developing proprietary AI systems requires appropriate computing resources and infrastructure to support model training, testing, and deployment. These technical foundations determine development speed, model complexity, and operational performance.

Assess your computing requirements based on model complexity, data volume, and performance expectations. Deep learning models, particularly those involving computer vision or natural language processing, often require specialized hardware such as GPUs or TPUs for efficient training. Simpler machine learning algorithms may run effectively on standard CPU infrastructure.

Consider cloud-based infrastructure for flexibility and scalability. Cloud platforms provide on-demand access to specialized AI hardware without significant upfront investment. This approach allows organizations to scale resources based on current needs and experiment with different configurations before committing to specific hardware.

Implement containerization and orchestration technologies such as Docker and Kubernetes to ensure consistent environments across development, testing, and production. These technologies simplify deployment and scaling while reducing environment-related issues that can complicate AI development.

A manufacturing company reduced its model training time from days to hours by implementing a hybrid infrastructure approach. They maintained sensitive data on-premises while leveraging cloud resources for computationally intensive training jobs, achieving both security and performance objectives.

Develop a strategy for model serving that addresses performance, scalability, and reliability requirements. Options range from simple REST APIs to specialized model serving platforms that optimize inference performance and support advanced features such as A/B testing and canary deployments. The implementation of AI process automation depends heavily on efficient model serving capabilities.

Building and Training Custom AI Models

The core of custom AI development involves building and training models that address specific business challenges. This process requires a systematic approach to model selection, development, and evaluation.

Begin by selecting appropriate model architectures based on your specific use cases and data characteristics. Different problems require different approaches—classification tasks might use random forests or neural networks, while time series forecasting might employ ARIMA models or recurrent neural networks. Match model complexity to available data and performance requirements.

Implement a structured development workflow that includes feature engineering, model training, hyperparameter tuning, and evaluation. This workflow should incorporate best practices such as train/validation/test splits and cross-validation to ensure reliable performance assessment. Document each step to enable reproducibility and knowledge sharing.

Apply transfer learning where appropriate to leverage pre-trained models and reduce development time. This approach is particularly valuable for domains such as computer vision and natural language processing, where foundation models provide powerful starting points for customization. Transfer learning can dramatically reduce data requirements and training time.

A healthcare organization developed a custom medical imaging analysis system that achieved 94% diagnostic accuracy by fine-tuning a pre-trained vision model on just 5,000 labeled examples—a fraction of the data typically required for training from scratch.

Establish rigorous evaluation protocols that assess models against both technical metrics and business objectives. Technical metrics such as accuracy, precision, and recall provide insights into model performance, while business metrics connect model outputs to tangible outcomes such as cost savings or revenue growth. This dual evaluation ensures that technical excellence translates to business value.

Model Selection and Architecture Design

Selecting appropriate model architectures is a critical decision in custom AI development that balances complexity, performance, and resource requirements. This decision shapes development effort, data needs, and ultimate capabilities.

Match model types to specific problem characteristics. Classification problems might use logistic regression, random forests, or neural networks depending on data complexity. Regression tasks could employ linear models, gradient boosting, or deep learning approaches. Natural language processing might require transformer architectures, while computer vision often leverages convolutional neural networks.

Consider interpretability requirements when selecting model architectures. Some applications, particularly in regulated industries or high-stakes decision contexts, require transparent models whose decisions can be explained and audited. Others may prioritize performance over interpretability. This balance influences the choice between highly interpretable models (such as decision trees) and complex “black box” approaches.

Evaluate the trade-offs between model complexity and practical constraints. More complex models may achieve higher theoretical performance but require more data, computing resources, and development time. They may also present challenges for deployment on resource-constrained platforms. Start with simpler models as baselines before investing in more sophisticated approaches.

A retail banking institution initially planned to implement a complex deep learning system for credit risk assessment but discovered that a gradient boosting model achieved comparable accuracy with faster training times and greater interpretability. This simpler approach accelerated their deployment timeline by three months.

Design a modular neural network architecture that separates different functional components. This approach facilitates experimentation, reuse, and maintenance. For complex systems, consider ensemble methods that combine multiple models to achieve higher performance and reliability than individual models alone.
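The simplest ensemble method for classification is majority voting, sketched below. The three prediction lists stand in for the outputs of three hypothetical models; weighted voting or stacking would be natural next steps.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-model prediction lists into one list of majority labels.

    `predictions` holds one list per model, all of the same length.
    Ties go to the label seen first among the models for that sample.
    """
    return [Counter(sample).most_common(1)[0][0] for sample in zip(*predictions)]


# Hypothetical outputs from three classifiers on four samples.
model_a = [1, 0, 1, 1]
model_b = [1, 1, 0, 1]
model_c = [0, 0, 1, 1]
combined = majority_vote([model_a, model_b, model_c])
```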

Training Methodologies and Best Practices

Effective training methodologies are essential for developing high-performing proprietary AI systems. These approaches ensure that models learn effectively from available data while avoiding common pitfalls such as overfitting.

Implement rigorous data splitting strategies that separate training, validation, and test datasets. This separation prevents data leakage and provides reliable performance estimates. For time-series data, ensure that splits respect temporal ordering. For data with imbalanced classes, use stratified sampling to maintain representative class distributions across splits. Where records are related (for example, several transactions from the same customer), keep each group within a single split so that near-duplicates do not leak from training into evaluation.
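The two splitting strategies named above can be sketched in a few lines. This is a minimal illustration, assuming records are dictionaries with a `timestamp` field and that labels fit in memory; libraries such as scikit-learn provide more complete implementations.

```python
import random
from collections import defaultdict


def temporal_split(records, train_frac=0.7, val_frac=0.15, key=lambda r: r["timestamp"]):
    """Split records so validation and test data come strictly later than training data."""
    ordered = sorted(records, key=key)
    n_train = int(len(ordered) * train_frac)
    n_val = int(len(ordered) * val_frac)
    return ordered[:n_train], ordered[n_train:n_train + n_val], ordered[n_train + n_val:]


def stratified_split(labels, test_frac=0.2, seed=0):
    """Return (train_idx, test_idx) with roughly the same class proportions in both."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    train_idx, test_idx = [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_test = max(1, round(len(idxs) * test_frac))  # keep at least one example per class
        test_idx.extend(idxs[:n_test])
        train_idx.extend(idxs[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Hypothetical data: ten timestamped records and a 90/10 imbalanced label set.
records = [{"timestamp": t} for t in [5, 3, 9, 1, 7, 2, 8, 4, 6, 0]]
train, val, test = temporal_split(records)

labels = [0] * 90 + [1] * 10
train_idx, test_idx = stratified_split(labels)
```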

Apply systematic hyperparameter tuning to optimize model performance. Approaches range from grid search and random search to more sophisticated methods such as Bayesian optimization. Document the search process and results to build organizational knowledge about effective configurations for different problem types.
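Random search is often a reasonable first step before heavier methods. The sketch below keeps the full trial history so the search can be documented as suggested above. The search space and the `toy_objective` function are hypothetical stand-ins; a real objective would train a model and return its validation loss.

```python
import math
import random


def random_search(objective, space, n_trials=50, seed=0):
    """Sample configurations at random and keep the one with the lowest score."""
    rng = random.Random(seed)
    history = []
    for _ in range(n_trials):
        config = {name: sampler(rng) for name, sampler in space.items()}
        history.append((objective(config), config))
    best_score, best_config = min(history, key=lambda item: item[0])
    return best_config, best_score, history


# Hypothetical search space: learning rate on a log scale, tree depth as an integer.
space = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, -1),
    "max_depth": lambda rng: rng.randint(2, 10),
}


def toy_objective(config):
    """Stand-in for a validation loss, minimized near learning_rate=0.01 and max_depth=6."""
    return (math.log10(config["learning_rate"]) + 2) ** 2 + (config["max_depth"] - 6) ** 2


best_config, best_score, history = random_search(toy_objective, space, n_trials=200, seed=1)
```

Sampling the learning rate on a log scale matters here: a uniform sample between 0.0001 and 0.1 would almost never try values near the low end of the range.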

Address class imbalance through appropriate techniques such as resampling, synthetic data generation, or specialized loss functions. Many real-world problems involve imbalanced data distributions that can bias models toward majority classes. Targeted approaches ensure that models perform well across all classes, including rare but important cases.
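A lightweight alternative to resampling is to weight the loss by inverse class frequency. The sketch below uses the formula n_samples / (n_classes × class_count), the same heuristic scikit-learn documents for its "balanced" class weights. The fraud/legit labels are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical transaction labels: 5% fraud.
labels = ["legit"] * 95 + ["fraud"] * 5
weights = balanced_class_weights(labels)

# Per-sample weights that could be passed to a weighted loss function.
sample_weights = [weights[y] for y in labels]
```

With these weights each class contributes equally to the total loss, so an error on a rare fraud case counts roughly 19 times as much as an error on a legitimate transaction.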

Research from Stanford’s AI Index Report indicates that organizations implementing systematic training methodologies achieve model performance improvements averaging 23% compared to ad hoc approaches. These improvements stem from more effective hyperparameter selection and better handling of data challenges.

Implement regularization techniques to prevent overfitting and improve generalization. Options include L1/L2 regularization, dropout, early stopping, and data augmentation. These approaches help models perform well on new data rather than simply memorizing training examples. The specific techniques depend on model type and data characteristics.
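Of the techniques listed, early stopping is the easiest to show in isolation. The sketch below is framework-agnostic and assumes the training loop reports one validation loss per epoch; the `patience` value and the loss sequence are made up for illustration.

```python
class EarlyStopping:
    """Stop training when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def should_stop(self, epoch, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            # Improvement: remember this epoch (a real loop would also checkpoint the model).
            self.best_loss, self.best_epoch = val_loss, epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation losses: improvement stalls after epoch 3.
val_losses = [0.90, 0.70, 0.62, 0.60, 0.61, 0.63, 0.64, 0.66]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.should_stop(epoch, loss):
        stopped_at = epoch
        break
```

In this run, training halts at epoch 6 and the model from epoch 3 would be the one to keep.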

Model Evaluation and Validation

Rigorous evaluation and validation ensure that custom AI development efforts produce models that perform reliably in real-world conditions. This process connects technical performance to business impact.

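Define comprehensive evaluation metrics aligned with business objectives. Select metrics that reflect the consequences of different types of errors in your specific context. In fraud detection, for example, a missed fraudulent transaction typically costs far more than a false alarm, so recall on the fraud class usually deserves more weight than overall accuracy.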
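To show how error types can be tied to business consequences, the sketch below computes precision and recall for a binary classifier and then prices each error type. The cost figures are placeholders, not benchmarks; in practice they would come from finance or operations.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def precision_recall(y_true, y_pred):
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def error_cost(y_true, y_pred, cost_fp=5.0, cost_fn=200.0):
    """Total cost of mistakes; the per-error costs are assumed values."""
    _, fp, fn, _ = confusion_counts(y_true, y_pred)
    return fp * cost_fp + fn * cost_fn


# Hypothetical labels and predictions for ten transactions.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
precision, recall = precision_recall(y_true, y_pred)
cost = error_cost(y_true, y_pred)
```

Here the model is 80% accurate, yet a single missed positive accounts for almost all of the cost, which is the kind of asymmetry a raw accuracy figure hides.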

Implement cross-validation strategies appropriate for your data characteristics. K-fold cross-validation works well for many problems, while time series data might require temporal validation approaches. These strategies provide more reliable performance estimates than single train-test splits, particularly with limited data.
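The two validation schemes mentioned above differ only in how indices are generated. The sketch below shows both in plain Python. The function names are our own, and the K-fold version assumes the data has already been shuffled if row order carries meaning.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, val_idx) pairs; each sample is used for validation exactly once."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def expanding_window_indices(n_samples, n_splits=3):
    """Yield (train_idx, val_idx) pairs where validation always follows training in time."""
    fold = n_samples // (n_splits + 1)
    for i in range(1, n_splits + 1):
        train_end = fold * i
        val_end = min(train_end + fold, n_samples)
        yield list(range(train_end)), list(range(train_end, val_end))


k_folds = list(k_fold_indices(10, k=3))
time_folds = list(expanding_window_indices(12, n_splits=3))
```

In the expanding-window variant the training set grows with each split while the validation block moves forward, which mimics how a forecasting model is retrained and used in production.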

Conduct thorough error analysis to understand model limitations and identify improvement opportunities. Examine patterns in misclassified examples or prediction errors to reveal systematic weaknesses. This analysis often uncovers data quality issues, missing features, or modeling assumptions that require adjustment.

A financial services company discovered through error analysis that their fraud detection model performed poorly on transactions from specific geographic regions underrepresented in their training data. By addressing this bias, they improved overall detection rates by 17% while reducing false positives by 23%.

Validate models against business metrics and key performance indicators. Technical metrics provide important information about model behavior, but business metrics such as cost savings, revenue impact, or customer satisfaction ultimately determine success. This connection ensures that custom AI automation solutions deliver tangible value.

Implementing and Deploying Custom AI Solutions

Successful implementation and deployment transform promising AI models into operational systems that deliver business value. This phase requires careful planning, robust engineering practices, and effective change management.

Develop a comprehensive implementation plan that addresses technical, operational, and organizational aspects of deployment. This plan should include infrastructure requirements, integration points with existing systems, testing protocols, and rollout strategies. Consider phased approaches that allow for controlled validation before full-scale deployment.

Implement robust MLOps practices that support the entire model lifecycle from development through deployment and monitoring. These practices include version control for code and models, automated testing, continuous integration/continuous deployment pipelines, and monitoring systems. Effective MLOps reduces deployment risks and enables rapid iteration.

Design integration points with existing business systems to ensure seamless data flow and user experience. These integrations may include APIs, event-driven architectures, or direct database connections depending on system requirements and organizational standards. Well-designed integrations minimize disruption while maximizing value.

A logistics company achieved a 99.8% availability rate for its route optimization AI by implementing comprehensive deployment automation, including canary deployments and automated rollbacks. This approach allowed them to deploy updates weekly without service disruptions.

Develop a change management strategy that prepares users and stakeholders for new AI capabilities. This strategy should include communication plans, training programs, and feedback mechanisms. Effective change management increases adoption rates and helps identify improvement opportunities based on real-world usage.

Integration with Existing Systems

Integrating proprietary AI systems with existing business infrastructure requires careful planning and execution to ensure seamless operation and data flow. This integration connects AI capabilities to business processes and data sources.

Map integration requirements by identifying all systems that will interact with the AI solution. These may include data sources, business applications, authentication systems, and monitoring platforms. Document data formats, communication protocols, and performance requirements for each integration point.

Design appropriate interfaces based on system requirements and organizational standards. REST APIs provide flexible integration for many applications, while event-driven architectures support real-time processing needs. For high-performance requirements, consider gRPC or other optimized protocols. Match interface design to specific use cases and technical constraints.

Implement robust error handling and fallback mechanisms to maintain system reliability. AI components may experience temporary unavailability or performance degradation. Proper error handling ensures that dependent systems can respond appropriately without cascading failures. Consider circuit breaker patterns and graceful degradation strategies for critical integrations.
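A circuit breaker with graceful degradation can be sketched in a few dozen lines. The version below is deliberately minimal: it is single-threaded, the failure threshold and cool-down are assumed values, and the `clock` parameter exists only so the behavior can be tested without waiting.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency for a cool-down period and serve a fallback instead."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()      # circuit open: skip the model entirely
            self.opened_at = None      # cool-down elapsed: allow one trial call
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0              # success closes the circuit
        return result


# Hypothetical usage: fall back to a static list when the model service is down.
now = [0.0]
model_calls = []


def failing_model():
    model_calls.append("attempt")
    raise RuntimeError("model unavailable")


breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: now[0])
first = breaker.call(failing_model, lambda: "popular items")
second = breaker.call(failing_model, lambda: "popular items")
third = breaker.call(failing_model, lambda: "popular items")  # served without calling the model
now[0] = 11.0
recovered = breaker.call(lambda: "personalized", lambda: "popular items")
```

Callers always receive a usable answer, and the struggling model service is given time to recover instead of being hit with every request.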

A retail organization integrated their custom product recommendation engine with their e-commerce platform through a microservices architecture that processed 50,000 requests per minute during peak shopping periods. Their design included caching layers and fallback recommendations that maintained 100% availability even during model retraining.

Develop comprehensive testing strategies that validate end-to-end functionality across integrated systems. These strategies should include unit tests, integration tests, performance tests, and user acceptance testing. Thorough testing identifies integration issues before they impact business operations.

Deployment Strategies and MLOps

Effective deployment strategies and MLOps practices ensure that custom AI development efforts translate into reliable, maintainable systems in production. These approaches bridge the gap between data science and operational excellence.

Implement containerization and orchestration technologies to ensure consistent environments across development, testing, and production. Docker containers package models with their dependencies, while Kubernetes or similar platforms manage deployment, scaling, and availability. This approach reduces “works on my machine” problems and simplifies deployment.

Establish automated CI/CD pipelines specifically designed for machine learning workflows. These pipelines should automate testing, validation, and deployment processes while maintaining traceability between data, code, and deployed models. Automation reduces deployment errors and accelerates the path from development to production.

Design deployment patterns appropriate for your risk tolerance and business requirements. Canary deployments expose new models to limited traffic before full rollout, while blue-green deployments maintain parallel environments for seamless switching. Shadow deployments run new models alongside existing ones for comparison without affecting production outcomes.
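Canary routing is often implemented by hashing a stable request or user identifier so that the same caller consistently sees the same model. The sketch below assumes string identifiers and a percentage-based split; the function name and the "stable"/"candidate" labels are illustrative.

```python
import hashlib


def route_model(request_id, canary_percent=5):
    """Deterministically send a fixed share of traffic to the candidate model."""
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    return "candidate" if bucket < canary_percent else "stable"


# Hypothetical traffic sample to check the realized split.
routes = [route_model(f"user-{i}") for i in range(10_000)]
canary_share = routes.count("candidate") / len(routes)
```

Raising `canary_percent` in steps widens the rollout without reshuffling users who were already on the candidate, and setting it to 0 acts as an immediate rollback.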

According to a recent DevOps Research and Assessment (DORA) report, organizations implementing mature MLOps practices deploy AI models 80% faster and experience 60% fewer production incidents than those using manual processes.

Implement comprehensive monitoring and observability for deployed models. This monitoring should track technical metrics (such as response times and resource utilization), model metrics (such as prediction distributions and feature importance), and business metrics (such as conversion rates or cost savings). Effective monitoring enables proactive management and continuous improvement of AI systems.

Monitoring and Maintenance

Ongoing monitoring and maintenance are essential for sustaining the performance and value of enterprise AI solutions over time. These activities ensure that AI systems continue to deliver business benefits despite changing conditions.

Implement comprehensive monitoring systems that track model performance, data quality, and business impact. Model monitoring should include prediction distributions, feature importance, and confidence scores. Data monitoring should detect drift in input distributions or in the relationships between features and outcomes. Business monitoring should connect model outputs to tangible outcomes.
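One common way to quantify drift in an input distribution is the population stability index (PSI). The following is a minimal pure-Python sketch; the bin count, the small floor used to avoid taking the logarithm of zero, and the 0.25 alert threshold are conventions assumed for illustration rather than fixed standards.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one numeric feature using PSI.

    Bins are derived from the range of the expected (training-time)
    sample; values outside that range fall into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a zero-width range

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p_exp, p_act = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_exp, p_act))


baseline = [i / 100 for i in range(1000)]   # training-time feature values
shifted = [v + 3 for v in baseline]         # production values after a shift

psi = population_stability_index(baseline, shifted)
print("drift alert" if psi > 0.25 else "stable")
```

A PSI of zero means the two samples fall into the bins in identical proportions; larger values indicate a larger shift.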

Establish alerting thresholds and response protocols for different types of issues. Critical problems affecting business operations require immediate attention, while gradual performance degradation might trigger scheduled maintenance. Clear protocols ensure appropriate responses to different situations.

Develop systematic retraining processes that update models based on new data and changing conditions. These processes should include triggers for retraining (such as performance degradation or data drift), validation protocols for new models, and deployment procedures. Regular retraining maintains model relevance and performance.
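Retraining triggers can be expressed as a small, testable policy. The sketch below is one possible shape; the `ModelHealth` fields and all three thresholds are hypothetical values that each team would set for its own models.

```python
from dataclasses import dataclass


@dataclass
class ModelHealth:
    """Hypothetical snapshot of a deployed model's condition."""
    baseline_accuracy: float   # accuracy measured at deployment time
    current_accuracy: float    # accuracy on recent labeled data
    drift_score: float         # drift statistic, e.g. a stability index
    days_since_training: int


def retraining_reasons(health, max_accuracy_drop=0.05,
                       max_drift=0.25, max_age_days=90):
    """Return every trigger that fired; an empty list means no action."""
    reasons = []
    if health.baseline_accuracy - health.current_accuracy > max_accuracy_drop:
        reasons.append("performance degradation")
    if health.drift_score > max_drift:
        reasons.append("data drift")
    if health.days_since_training > max_age_days:
        reasons.append("scheduled refresh")
    return reasons


health = ModelHealth(baseline_accuracy=0.92, current_accuracy=0.85,
                     drift_score=0.31, days_since_training=40)
print(retraining_reasons(health))
```

Returning the reasons rather than a bare boolean makes it easier to log why a retraining job started and to validate the new model against the specific problem that triggered it.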

A healthcare provider implemented automated monitoring for their patient risk prediction system that detected a 12% shift in feature importance patterns following a change in clinical protocols. This early detection allowed them to retrain their model before performance degradation affected patient care.

Create comprehensive documentation that supports long-term maintenance and knowledge transfer. This documentation should cover model architecture, training procedures, data dependencies, deployment processes, and known limitations. Thorough documentation ensures that AI systems remain maintainable even as team members change.

Scaling and Evolving Your Custom AI

Scaling and evolving proprietary AI systems enable organizations to expand capabilities, improve performance, and adapt to changing business needs. This ongoing process transforms initial AI implementations into strategic assets that drive long-term value.

Develop a roadmap for AI capability expansion that aligns with business strategy and user feedback. This roadmap should identify opportunities to extend existing models to new use cases, incorporate additional data sources, or implement new AI techniques. Prioritize initiatives based on business impact, technical feasibility, and resource requirements.

Implement architectural patterns that support scalability across multiple dimensions. Horizontal scalability addresses increasing request volumes, while vertical scalability supports more complex models or larger datasets. Functional scalability enables the addition of new capabilities without disrupting existing functionality.

Establish processes for continuous learning and improvement based on production performance and user feedback. These processes should include mechanisms for collecting and analyzing feedback, prioritizing enhancements, and measuring the impact of improvements. Continuous learning ensures that AI systems evolve to meet changing needs.

A global manufacturer expanded their initial predictive maintenance AI from a single production line to 27 facilities worldwide over 18 months. Their modular architecture allowed for local customization while maintaining a consistent core, reducing implementation time for each new facility by 65%.

Create governance structures that balance innovation with stability as AI systems scale. These structures should define decision-making processes for changes, establish quality standards, and align technical decisions with business priorities. Effective governance enables controlled evolution while maintaining reliability.

Performance Optimization and Scaling

Optimizing and scaling custom AI systems ensures that they can handle growing demands while maintaining or improving performance. These efforts address both technical efficiency and business effectiveness.

Implement systematic performance profiling to identify bottlenecks in AI processing pipelines. Profiling should examine data preprocessing, model inference, and post-processing steps to pinpoint optimization opportunities. Focus initial efforts on components that consume the most resources or create processing delays.
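A lightweight way to start profiling is to time each pipeline stage separately. The sketch below uses a context manager around toy `preprocess`, `infer`, and `postprocess` functions; all three are stand-ins invented for this example.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

timings = defaultdict(float)  # stage name -> accumulated seconds


@contextmanager
def timed(stage):
    """Accumulate wall-clock time spent inside the with-block."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] += time.perf_counter() - start


# Toy stand-ins for real pipeline stages.
def preprocess(rows):
    return [[float(x) for x in row] for row in rows]


def infer(features):
    return [sum(f) / len(f) for f in features]


def postprocess(scores):
    return ["high" if s > 0.5 else "low" for s in scores]


rows = [["0.2", "0.9"], ["0.1", "0.3"]] * 1000

with timed("preprocess"):
    features = preprocess(rows)
with timed("inference"):
    scores = infer(features)
with timed("postprocess"):
    labels = postprocess(scores)

# Report the slowest stages first.
for stage, seconds in sorted(timings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{stage:<12} {seconds * 1000:.2f} ms")
```

Once the slowest stage is known, a dedicated profiler can be applied to that stage alone.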

Apply model optimization techniques appropriate for your deployment environment and performance requirements. Options include quantization (reducing numerical precision), pruning (removing unnecessary connections in neural networks), and knowledge distillation (creating smaller models that mimic larger ones). These techniques can dramatically reduce resource requirements while maintaining accuracy.
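To make quantization concrete, here is a minimal sketch of affine 8-bit quantization on a plain list of weights. It is a teaching example under simplifying assumptions (one scale for the whole list, no framework support); real deployments would normally rely on the quantization tooling of their ML framework.

```python
def quantize(weights, num_bits=8):
    """Map floats to unsigned integers with one scale and zero point."""
    qmin, qmax = 0, 2 ** num_bits - 1
    w_min, w_max = min(weights), max(weights)
    scale = (w_max - w_min) / (qmax - qmin) or 1.0  # guard constant input
    zero_point = round(qmin - w_min / scale)
    quantized = [
        min(max(round(w / scale) + zero_point, qmin), qmax)
        for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from the integer codes."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.2, -0.4, 0.0, 0.35, 0.9, 2.1]
codes, scale, zero_point = quantize(weights)
restored = dequantize(codes, scale, zero_point)

max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(f"codes: {codes}")
print(f"max round-trip error: {max_error:.5f} (scale = {scale:.5f})")
```

Each 8-bit code needs a quarter of the storage of a 32-bit float, and the round-trip error stays within about half of one quantization step.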

Design scalable architecture patterns that support growing workloads. Microservices architectures enable independent scaling of different components, while serverless approaches automatically adjust resources based on demand. Caching strategies can reduce computation needs for frequent or similar requests.
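Caching for frequent or similar requests can be sketched with the standard library's `functools.lru_cache`. In this example `expensive_model` is a placeholder for a real inference call, and rounding inputs to two decimals is an assumed policy for treating near-identical requests as the same; it trades a little precision for a higher hit rate.

```python
from functools import lru_cache

call_count = 0  # counts how often the underlying model actually runs


def expensive_model(features):
    """Placeholder for a costly inference call."""
    global call_count
    call_count += 1
    weights = (0.4, 0.6)
    return sum(w * x for w, x in zip(weights, features))


@lru_cache(maxsize=1024)
def cached_predict(key):
    # lru_cache needs hashable arguments, so the key is a tuple.
    return expensive_model(key)


def predict(features, precision=2):
    """Round inputs so that similar requests share one cache entry."""
    key = tuple(round(x, precision) for x in features)
    return cached_predict(key)


first = predict([0.501, 0.25])
second = predict([0.499, 0.25])  # rounds to the same key as the first call

print(f"model calls: {call_count}, cache: {cached_predict.cache_info()}")
```

The `maxsize` argument bounds memory use; least recently used entries are evicted once the cache is full.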

A financial services company reduced their fraud detection model’s inference time by 78% through a combination of model pruning and GPU acceleration, enabling real-time screening of transactions without increasing infrastructure costs despite a 40% growth in transaction volume.

Implement horizontal scaling capabilities that distribute workloads across multiple computing resources. This approach supports higher request volumes and provides redundancy for improved reliability. Effective load balancing ensures efficient resource utilization across distributed components.
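The idea of distributing requests across replicas while tolerating failures can be illustrated with a small round-robin balancer. The `RoundRobinBalancer` class and the replica names are hypothetical; managed load balancers and orchestration platforms provide this behavior in practice.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Rotate through replicas, skipping any that are marked down."""

    def __init__(self, replicas):
        self.replicas = list(replicas)
        self.healthy = set(self.replicas)
        self._ring = cycle(self.replicas)

    def mark_down(self, replica):
        self.healthy.discard(replica)

    def mark_up(self, replica):
        if replica in self.replicas:
            self.healthy.add(replica)

    def next_replica(self):
        if not self.healthy:
            raise RuntimeError("no healthy replicas available")
        # At most one full rotation is needed to find a healthy replica.
        for _ in range(len(self.replicas)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy replicas available")


balancer = RoundRobinBalancer(["model-a", "model-b", "model-c"])
print([balancer.next_replica() for _ in range(3)])

balancer.mark_down("model-b")  # simulate a failed replica
print([balancer.next_replica() for _ in range(4)])
```

Because unhealthy replicas are skipped rather than removed, a recovered replica rejoins the rotation as soon as it is marked up again.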

Continuous Improvement and Iteration

Continuous improvement processes ensure that enterprise AI solutions evolve to meet changing business needs and incorporate new capabilities. This ongoing refinement maximizes long-term value and adaptability.

Establish feedback loops that capture insights from users, stakeholders, and system performance. These loops should include structured mechanisms for collecting, analyzing, and prioritizing feedback. Regular retrospectives help identify patterns and improvement opportunities across multiple feedback sources.

Implement A/B testing frameworks that enable controlled comparison of model variations. These frameworks allow organizations to validate improvements before full deployment and quantify performance differences. Systematic experimentation accelerates learning while managing risks.
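Quantifying the difference between two model variants often comes down to a significance test. The sketch below applies a two-proportion z-test to conversion counts; the counts and the 0.05 significance level are made-up values for illustration, and the test assumes independent samples that are large enough for the normal approximation.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference in success rates."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0 or all 1")
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Variant A: current model, variant B: candidate model (illustrative counts).
z, p_value = two_proportion_z_test(480, 10_000, 560, 10_000)
verdict = "significant" if p_value < 0.05 else "not significant"
print(f"z = {z:.2f}, p = {p_value:.4f} ({verdict})")
```

Deciding the sample size and significance level before the experiment starts helps avoid stopping early on a result that only looks favorable by chance.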

Develop processes for incorporating new AI techniques and capabilities as they emerge. The AI field evolves rapidly, with new approaches regularly demonstrating improved performance or efficiency. Staying current with these developments helps organizations maintain competitive advantages and address previously unsolvable problems.

Research from MIT indicates that organizations with structured AI improvement processes achieve 3.5 times greater return on AI investments over five years compared to those implementing static solutions. This advantage compounds as improvements build upon previous enhancements.

Create knowledge management systems that capture insights, best practices, and lessons learned throughout the AI lifecycle. These systems help organizations avoid repeating mistakes, accelerate future development, and build institutional expertise. Effective knowledge management is particularly valuable as AI applications expand across the organization.

Future-Proofing Your AI Investment

Future-proofing ensures that investments in building your own AI deliver sustainable value despite technological changes and evolving business requirements. This strategic approach maximizes long-term returns while minimizing obsolescence risks.

Design modular architectures that separate concerns and enable component-level updates. This approach allows organizations to replace or enhance specific elements without rebuilding entire systems. Modularity supports incremental improvement and adaptation to new technologies or requirements.
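Component-level replacement is easier when the rest of the system depends on an interface rather than on a specific model. The sketch below uses `typing.Protocol` for that purpose; `Scorer`, `RuleBasedScorer`, `WeightedScorer`, and `RiskService` are hypothetical names chosen for this example.

```python
from typing import Protocol, Sequence


class Scorer(Protocol):
    """Interface that every scoring component must satisfy."""

    def score(self, features: Sequence[float]) -> float: ...


class RuleBasedScorer:
    """Initial component: a single hand-written rule."""

    def score(self, features: Sequence[float]) -> float:
        return 1.0 if features[0] > 100 else 0.0


class WeightedScorer:
    """Later replacement: a weighted sum capped at 1.0."""

    def __init__(self, weights: Sequence[float]):
        self.weights = list(weights)

    def score(self, features: Sequence[float]) -> float:
        total = sum(w * x for w, x in zip(self.weights, features))
        return min(1.0, total)


class RiskService:
    """Depends only on the Scorer interface, not on a concrete model."""

    def __init__(self, scorer: Scorer, threshold: float = 0.5):
        self.scorer = scorer
        self.threshold = threshold

    def decide(self, features: Sequence[float]) -> str:
        return "review" if self.scorer.score(features) >= self.threshold else "approve"


service = RiskService(RuleBasedScorer())
print(service.decide([150.0]))

# Swap the component without touching RiskService.
service.scorer = WeightedScorer([0.001])
print(service.decide([150.0]))
```

The calling code stays unchanged when the scoring component is upgraded, which is the property that lets a team adopt a new technique without rebuilding the surrounding system.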

Implement flexible data pipelines that can accommodate new data sources and formats. As organizations collect more data or access new external sources, these pipelines should adapt without major restructuring. Data flexibility ensures that AI systems can incorporate valuable new information as it becomes available.
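One way to keep a pipeline open to new formats is a parser registry: each format registers its own parser, and the ingestion step stays unchanged. The `register` decorator, the `ingest` function, and the sample records below are assumptions made for this sketch.

```python
import csv
import io
import json
from typing import Callable, Dict, List

Record = Dict[str, str]
PARSERS: Dict[str, Callable[[str], List[Record]]] = {}


def register(fmt: str):
    """Decorator that adds a parser for one source format."""
    def decorator(fn):
        PARSERS[fmt] = fn
        return fn
    return decorator


@register("csv")
def parse_csv(text: str) -> List[Record]:
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


@register("json")
def parse_json(text: str) -> List[Record]:
    # Normalize values to strings so every source yields the same shape.
    return [{k: str(v) for k, v in row.items()} for row in json.loads(text)]


def ingest(fmt: str, text: str) -> List[Record]:
    """Dispatch to the registered parser for the given format."""
    try:
        parser = PARSERS[fmt]
    except KeyError:
        raise ValueError(f"no parser registered for format: {fmt}") from None
    return parser(text)


print(ingest("csv", "id,amount\n1,9.5\n"))
print(ingest("json", '[{"id": 1, "amount": 9.5}]'))
```

Supporting a new source then means adding one decorated function rather than restructuring the pipeline.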

Develop skills and knowledge across the organization to support ongoing AI evolution. This development should include technical capabilities for AI practitioners, domain knowledge for business stakeholders, and AI literacy for end users. Broad capability development reduces dependency on specific individuals and enables collaborative improvement.

A healthcare technology company designed their clinical decision support system with a modular architecture that allowed them to incorporate three major advances in medical AI research within 18 months of initial deployment, each time improving diagnostic accuracy without disrupting existing functionality.

Establish technology radar processes that systematically evaluate emerging AI capabilities and assess their potential value. These processes help organizations identify opportunities to incorporate beneficial new approaches while avoiding investments in unproven or misaligned technologies. Regular horizon scanning ensures awareness of developments that might impact AI strategy.

Future Trends in Building Your Own AI

The landscape of custom AI development continues to evolve rapidly, with emerging trends shaping how organizations approach building their own AI. Understanding these trends helps enterprises prepare for future opportunities and challenges.

Personalized AI development platforms are emerging that combine the flexibility of custom solutions with the accessibility of pre-built components. These platforms provide configurable building blocks that organizations can assemble and customize to address specific needs. This approach reduces development time while maintaining solution specificity.

Cross-domain knowledge integration is becoming increasingly important as AI systems address complex problems that span multiple domains. Future AI development will emphasize techniques for combining knowledge and models across traditionally separate fields. This integration enables more comprehensive solutions to multifaceted business challenges.

No-code and low-code AI customization tools are democratizing AI development by enabling domain experts to create and modify AI solutions without extensive programming knowledge. These tools provide visual interfaces for model configuration, data preparation, and deployment. As these tools mature, they will accelerate AI adoption across organizations.

A recent Gartner report predicts that by 2025, over 70% of new enterprise AI applications will be built using low-code or no-code platforms, compared to less than 30% in 2021. This shift will dramatically expand the pool of AI creators within organizations.

AI ethics self-regulation frameworks are emerging as organizations recognize the importance of responsible AI development. These frameworks provide structured approaches for addressing bias, fairness, transparency, and accountability throughout the AI lifecycle. Future custom AI development will increasingly incorporate these considerations as standard practice rather than afterthoughts.

Collaborative human-AI creation models are redefining development processes by positioning AI as an active participant in its own creation. These approaches leverage AI capabilities to assist with tasks such as feature selection, architecture design, and code generation. This collaboration combines human creativity and domain knowledge with AI’s analytical capabilities and pattern recognition.

The future of building your own AI will likely involve hybrid approaches that combine custom development with pre-built components, automated tools with human expertise, and internal capabilities with external partnerships. Organizations that develop flexible, adaptable strategies for AI development will be best positioned to leverage these emerging trends.

As AI technologies continue to evolve, the distinction between building and configuring AI systems will blur. Organizations will increasingly focus on creating unique AI capabilities through composition, customization, and domain-specific optimization rather than developing every component from scratch.

Frequently Asked Questions

Can I create my own AI?

Yes. Creating your own AI means designing and developing intelligent systems that automate tasks and enhance decision-making. Here’s what you need to consider:

- Customization: Building AI from scratch allows you to tailor it to specific business needs using techniques such as **machine learning** or **natural language processing**.

- Development Tools: Tools like TensorFlow or PyTorch facilitate AI development by providing robust frameworks and libraries.

- Expertise Required: Creating AI often demands specialized knowledge in AI engineering and data science.

- Integration Challenges: Integrating custom AI systems into existing infrastructure can be complex.

For instance, companies like Google have developed AI from scratch, showcasing the potential of custom AI development in transforming business operations.

Can I build AI for free?

Building AI can be cost-effective, but it’s rarely free. A robust AI system requires investment in resources and expertise. Here are some free or low-cost options:

- Open-Source Tools: Utilizing open-source AI frameworks like TensorFlow or Scikit-learn can reduce development costs.

- Free Trials and Demos: Many AI platforms offer free trials or demo versions, allowing users to test features before committing financially.

- No-Code Platforms: Platforms like GPTBots provide no-code solutions for building simple AI chatbots, minimizing the need for extensive coding knowledge.

- Crowdsourced Data: Leveraging crowdsourced data can help reduce the cost of collecting and labeling training data.

For example, GPTBots offers a no-code interface for creating AI chatbots, making AI development more accessible without heavy financial investment.

How can I create AI for free?

Creating AI for free involves leveraging open-source tools and no-code platforms. Here’s how to get started:

- No-Code Platforms: Use platforms like GPTBots to build AI chatbots without needing to code.

- Free AI Tools: Tools like Google’s Teachable Machine allow users to create simple AI models without cost.

- Open-Source Libraries: Libraries like PyTorch or TensorFlow offer free access to AI frameworks.

- Public Datasets: Utilize publicly available datasets to train AI models without incurring data collection costs.

Open-source communities have been instrumental in creating free AI tools, as seen in frameworks like TensorFlow.

How can I create a system like OpenAI’s?

Creating a system comparable to those built by OpenAI involves developing sophisticated AI models like those used in language generation or machine vision. Here are the key requirements:

- Advanced AI Models: Developing models comparable to OpenAI’s requires extensive expertise in deep learning and AI architecture.

- Large-Scale Training Datasets: Access to vast amounts of high-quality data is necessary to train robust AI systems.

- Significant Computational Resources: Training large AI models demands substantial computing power and infrastructure.

- Specialized Expertise: Creating AI systems like OpenAI’s requires a team with deep knowledge of AI engineering and natural language processing.

OpenAI itself is a pioneering example of what can be achieved by combining cutting-edge AI technology with large-scale data and computational resources.